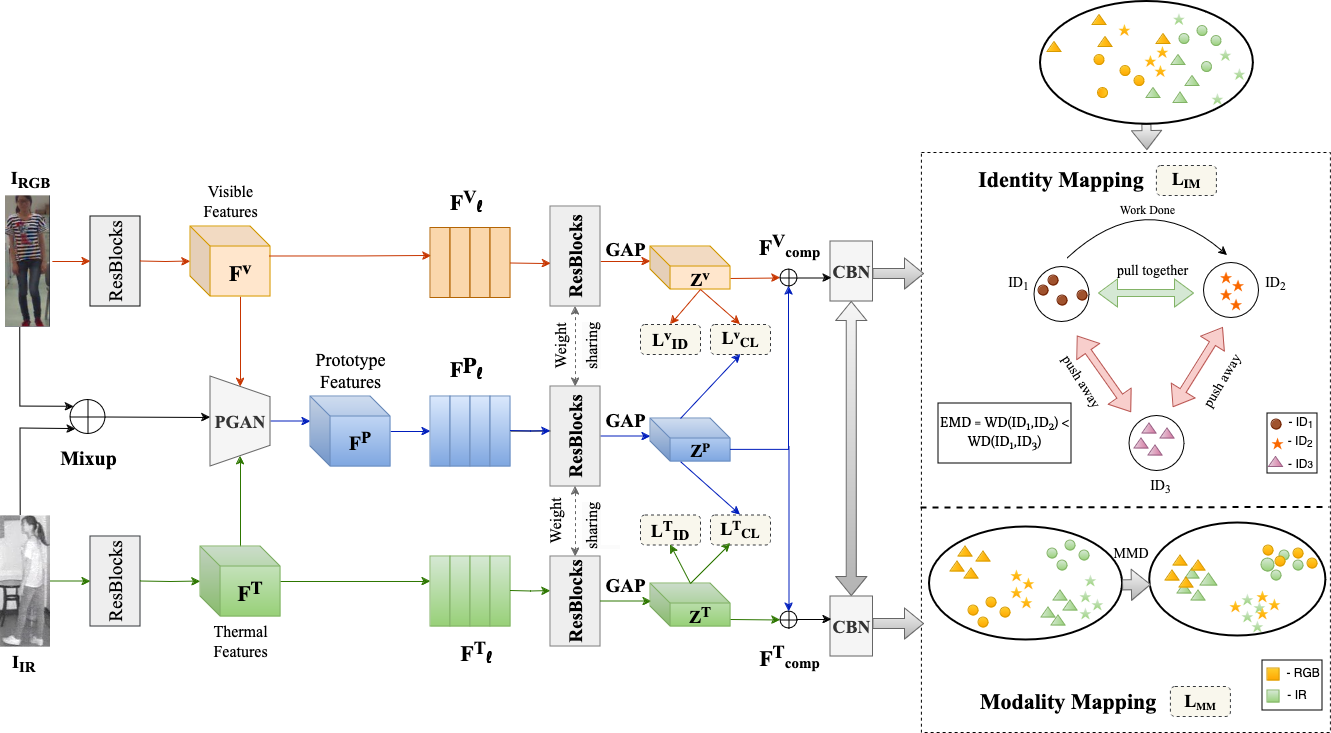

Person Re-Identification (Re-ID) aims to match individuals across different camera views, making it a crucial task in applications such as security, crowd management, and surveillance. The task is challenging because of variations in illumination, occlusion, and heterogeneous data modalities. Existing methods struggle with domain discrepancies and feature misalignment, particularly between RGB and infrared (IR) images, which limits their generalization to unseen environments and degrades performance in real-world scenarios such as crowded surveillance and cross-modal tracking. Addressing these challenges requires a robust and adaptive framework that learns discriminative cross-modal features while continuously adapting to new data. Optimal Transport Theory (OTT) is well suited to this goal: by aligning feature distributions across modalities, it promotes perceptual similarity and improves cross-domain generalization.