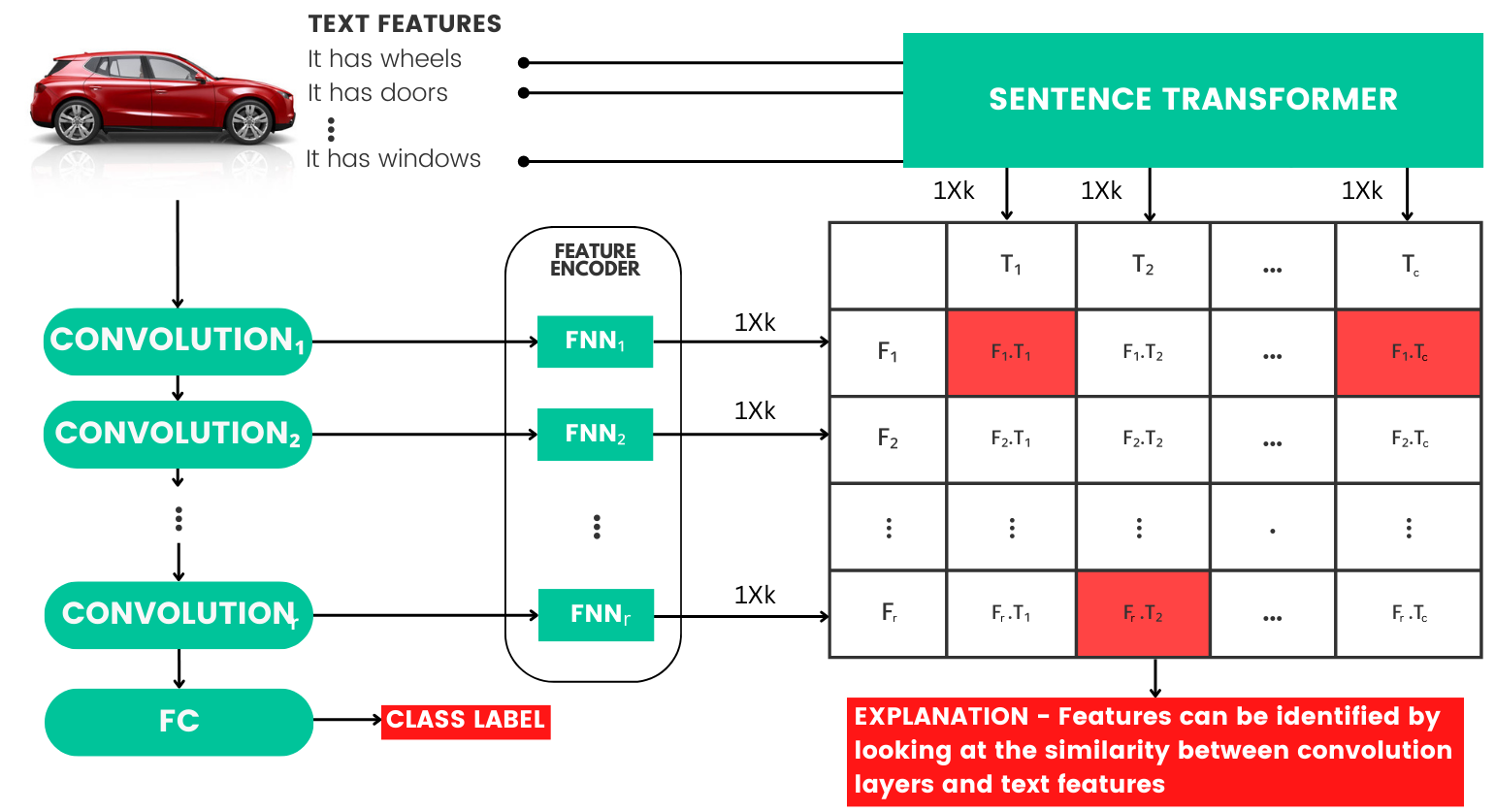

Many current deep learning image classification models are considered black boxes and lack explainability. Existing explainability methods fail to address the trade-off between accuracy and interpretability, and they also fail to give user-friendly explanations. In this research, we study the effect of textual data on explanations by adding it to the final layer, and we also observe an increase in the classification accuracy of the model. We further propose a simple yet effective text-guided training scheme in which the model learns to match textual features with visual features. We also explore the effect of explainability on the classification performance and generalization of the model. While previous methods generate explanations from the final layers, we generate them from inside the network, giving a high degree of transparency and explainability.